RATINGS & REVIEWS

CONTEXT

Because ASOS is an online-only retailer, customers are unable to try items on or assess their quality in person before buying. This results in a high returns rate, which is expensive for the business and inconvenient for the customer.

CUSTOMER PROBLEMS

Based on the jobs-to-be-done research I had carried out previously, we knew that some of customers' top unmet needs when shopping for clothing online were:

- Determining what size they need
- Finding items that suit them
- Assessing the quality of an item

This was corroborated by the returns data, in which "Doesn't fit properly" (57%) and "Doesn't suit me" (20%) were the top reasons for returning clothing, footwear and activewear.

Customer reviews were also one of the most requested features in our NPS survey and across social media.

BUSINESS PROBLEMS

The business would also benefit from reviews. At the time, it only received feedback on product quality and size & fit issues via returns; having this additional information would help the retail team improve the products, in particular our own brands.

Initially, however, the business was concerned that because products sell through so quickly on ASOS, they would be sold out by the time reviews were submitted. Data we obtained from the analytics team showed otherwise: 29 weeks after a product is put live, only 40% of clothing, footwear and activewear is fully sold out, so the remaining 60% is still available to buy.

99% SELL-THROUGH

OBJECTIVES

1. Improve customer confidence by removing barriers to purchase

2. Improve the customer's chance of finding the right product first time

3. Increase engagement with the ASOS brand

KPIS

PRIMARY KPIS

- Increase conversion
- Decrease returns rate
- Increase visit frequency

SECONDARY KPIS

- Review coverage
- Review depth

RESEARCH

Competitor Analysis

We conducted an extensive competitor review of both fashion and beauty retailers to identify common themes and best practices, who was doing it well, and where there was opportunity for improvement.

Customer surveys

We ran several surveys with our customers to answer the following research questions:

- How important were ratings & reviews for fashion & beauty products?
- Which product categories were the most important for reviews?
- What information did customers want to see in reviews?
- What information did they want to know about the reviewer?
- Which features were the most important for ratings & reviews?
- How important was the product rating in comparison to other information on the product page?
- Was the rating enough, or did customers also want to see a written review?
- What impact did the rating itself have on customers' propensity to purchase the product?

WIREFRAMES & DESIGNS

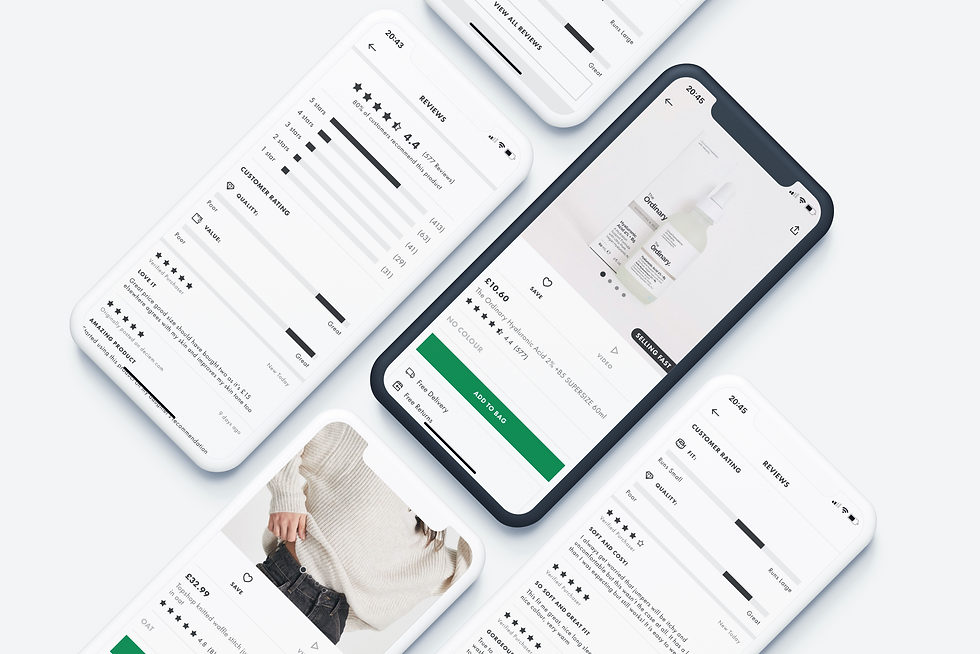

Initially we went wide, generating lots of different ideas for how ratings & reviews could look on ASOS, which we then refined through multiple rounds of user testing.

PREFERENCE TESTS

As we refined the designs further, we ran preference tests to find out which options customers preferred for the finer details of the experience.

HOW MANY DECIMAL PLACES TO DISPLAY

66% of participants preferred the rating displayed to one decimal place: they found it easier to understand and cleaner-looking, and felt that two decimal places were unnecessary.
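The case study doesn't describe how the displayed rating is calculated. As a minimal sketch, assuming it is the mean of individual 1 to 5 star ratings, one-decimal formatting could look like this; the function name `format_rating` is hypothetical. Using `Decimal` with `ROUND_HALF_UP` is an assumed design choice: Python's built-in `round` rounds halves to even, so an average of exactly 4.25 would otherwise display as 4.2.

```python
from decimal import Decimal, ROUND_HALF_UP


def format_rating(ratings: list[int]) -> str:
    """Hypothetical helper: mean star rating formatted to one decimal place.

    Decimal arithmetic with ROUND_HALF_UP is used so that an exact
    half (e.g. 4.25) rounds up, as most readers would expect.
    """
    if not ratings:
        raise ValueError("cannot format a rating with no reviews")
    average = Decimal(sum(ratings)) / Decimal(len(ratings))
    return str(average.quantize(Decimal("0.1"), rounding=ROUND_HALF_UP))


print(format_rating([5, 4, 4, 4]))  # 17/4 = 4.25 -> 4.3
print(format_rating([5, 4, 4]))     # 13/3 = 4.333... -> 4.3
```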

FUN ICONS OR KEEP IT CLEAN & SIMPLE

85% of participants preferred the rating bars with icons, finding them easier to understand, visually appealing and more accessible; the icons also added an element of delight to the experience.

RATING ICON OR NUMBER OF STARS

80% of participants preferred seeing the rating with five stars, as they found it easier to understand, scan and compare, and it was a design pattern they were more familiar with.

EMPTY STARS OR NO STARS

75% of participants preferred seeing no stars rather than empty stars on unreviewed products, as this made it more obvious that the product hadn't received any reviews yet.
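Taken together, the five-star and no-stars preferences suggest display logic along these lines. This is a minimal sketch with a hypothetical `star_row` function: text characters stand in for the actual star icons, and rounding to the nearest whole star (halves rounded up) is an assumption rather than a documented ASOS rule.

```python
import math
from typing import Optional


def star_row(average: Optional[float], max_stars: int = 5) -> str:
    """Hypothetical helper: render an average rating as a row of stars.

    Products with no reviews (average is None) get an empty string,
    i.e. no stars at all, rather than a row of empty stars.
    """
    if average is None:
        return ""
    # Assumption: round to the nearest whole star, with halves rounded up.
    filled = min(max_stars, max(0, math.floor(average + 0.5)))
    return "★" * filled + "☆" * (max_stars - filled)


print(repr(star_row(None)))  # '' (no stars shown)
print(star_row(4.3))         # ★★★★☆
```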

ANIMATIONS

I also explored ways to add elements of delight to the experience through animations for the stars, the rating and the rating bars.

MVP & FEATURE DESIRABILITY

To determine which features were most important for the MVP, I ran a survey based on the Kano model to measure the desirability of different features. For each feature, customers were asked how they would feel if it was included, how they would feel if it was excluded, and how important it was to them.

FEATURES

RANKED IN ORDER OF DESIRABILITY

Based on the combined survey responses, we were able to categorise each feature into one of four categories (Must have, Performance, Attractive and Indifferent) and rank the features in order of desirability.
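The categorisation step can be sketched with the standard Kano evaluation table, which maps each respondent's pair of answers (feature included, feature excluded) to a category. This is a minimal sketch, not the analysis actually used: the five-point answer scale, the function names, and the ranking rule (Must have, then Performance, then Attractive, then Indifferent, with ties broken by mean stated importance) are all assumptions. The standard table also yields Reverse and Questionable answers, which are ignored here when picking a feature's category.

```python
from collections import Counter

# Assumed five-point answer scale, used for both the functional question
# (feature included) and the dysfunctional question (feature excluded).
ANSWERS = ["like", "expect", "neutral", "tolerate", "dislike"]

# Standard Kano evaluation table: rows = functional answer,
# columns = dysfunctional answer. M = Must have, P = Performance,
# A = Attractive, I = Indifferent, R = Reverse, Q = Questionable.
TABLE = [
    # like  expect neutral tolerate dislike
    ["Q", "A", "A", "A", "P"],  # like
    ["R", "I", "I", "I", "M"],  # expect
    ["R", "I", "I", "I", "M"],  # neutral
    ["R", "I", "I", "I", "M"],  # tolerate
    ["R", "R", "R", "R", "Q"],  # dislike
]

# Assumed ranking order for the four categories used in the case study.
PRIORITY = {"M": 0, "P": 1, "A": 2, "I": 3}


def classify(functional: str, dysfunctional: str) -> str:
    """Map one respondent's pair of answers to a Kano category."""
    return TABLE[ANSWERS.index(functional)][ANSWERS.index(dysfunctional)]


def categorise_feature(responses):
    """Return (category, mean importance) for one feature, or None.

    responses is a list of (functional, dysfunctional, importance) tuples.
    The category is the most frequent of M/P/A/I (R and Q are ignored);
    ties go to the higher-priority category.
    """
    counts = Counter(classify(f, d) for f, d, _ in responses)
    valid = {c: n for c, n in counts.items() if c in PRIORITY}
    if not valid:
        return None
    category = max(valid, key=lambda c: (valid[c], -PRIORITY[c]))
    mean_importance = sum(i for _, _, i in responses) / len(responses)
    return category, mean_importance


def rank_features(survey):
    """Rank feature names by category priority, then by mean importance."""
    results = {name: categorise_feature(r) for name, r in survey.items()}
    scored = {name: res for name, res in results.items() if res is not None}
    return sorted(scored, key=lambda n: (PRIORITY[scored[n][0]], -scored[n][1]))
```

`rank_features` takes a dict mapping each feature name to its list of responses and returns the feature names in ranked order; an engineering complexity estimate could then be weighed against that list when scoping the MVP.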

These results, along with the engineers' assessment of technical complexity, helped us decide which features to include in the initial MVP.

Although displaying the rating on the category page was the top-rated feature from the customers' perspective, we decided not to include it in the MVP. We wanted to wait until we had built up higher coverage and depth of reviews, so that we could A/B test the effect of showing the rating at that stage in the customer journey.

FINAL DESIGNS

OUTCOMES & FURTHER TESTING

The launch of ratings & reviews was very well received by our customers, who loved seeing what other customers thought of the products and appreciated the additional information on size & fit and quality, as well as pictures of customers wearing the items.

The initial analysis showed that products rated 4.0-4.9 stars saw a 0.77% increase in conversion, and products rated 5 stars saw a 1.94% increase. However, products rated below 4 stars had a negative effect on conversion. Although there was also a 3.17% reduction in returns, it was not enough to outweigh the overall drop in conversion. Post-launch, we therefore iterated and experimented to find a balance between customer and business goals.

THRESHOLD OF 3

For some of our popular brands, e.g. Nike, Adidas, Puma and Reebok, we were syndicating reviews, so these products had a greater depth of reviews. We could see that the more reviews a product had, the higher its average rating, which in turn limited the negative impact of low ratings on conversion; these brands were benefiting from the introduction of reviews.

Because ratings & reviews had only just launched, many products had only 1 or 2 reviews. We therefore decided to test a minimum threshold of 3 reviews before displaying the rating & reviews on the product page, so that customers would get a more balanced view of the product.
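The threshold rule amounts to a simple gate in front of the display logic. A minimal sketch, assuming the product page receives the product's individual star ratings; the names `displayed_rating` and `MIN_REVIEWS` are hypothetical.

```python
from typing import Optional, Sequence

# Hypothetical constant: minimum number of reviews before the rating
# & reviews are shown (the threshold of 3 tested post-launch).
MIN_REVIEWS = 3


def displayed_rating(ratings: Sequence[int], min_reviews: int = MIN_REVIEWS) -> Optional[float]:
    """Return the average rating to display, or None to hide it.

    ratings holds a product's individual star ratings (assumed 1 to 5).
    Below the threshold nothing is shown, so one or two early reviews
    cannot define how the product is presented.
    """
    if len(ratings) < min_reviews:
        return None
    return sum(ratings) / len(ratings)


print(displayed_rating([2, 5]))     # None (only 2 reviews)
print(displayed_rating([5, 4, 3]))  # 4.0
```

Keeping the threshold as a parameter means different values could be compared in an A/B test without changing the display code.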

We ran this as an A/B test, which saw buying visitors increase by 0.69% and weekly incremental revenue increase by £428k.

NEXT STEPS

We will continue to experiment and improve the experience as well as introduce more of the features that didn't make it into the MVP.